Exact, Exacting: Who is the Most Accurate World Champion?

Do chess players dream of electric fish?

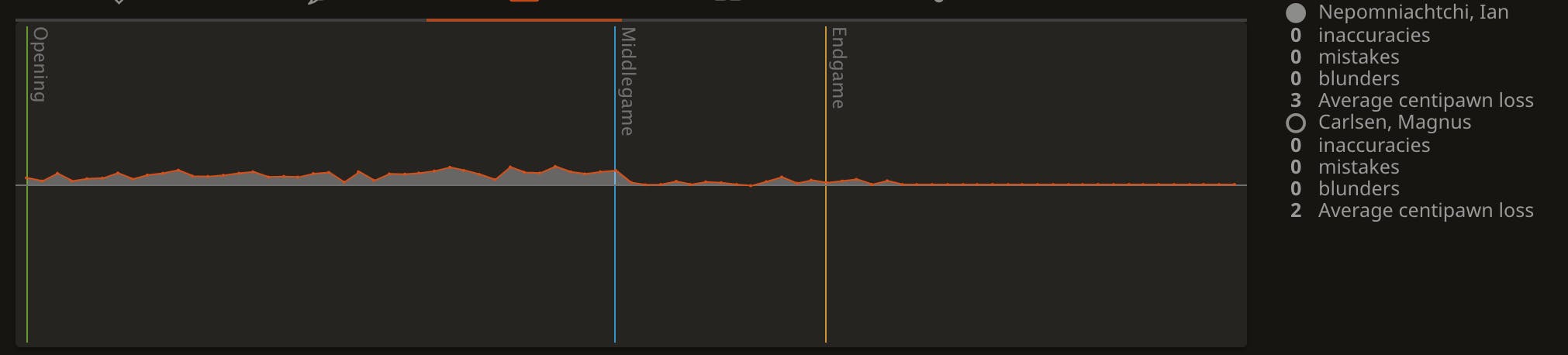

After round 3 of the FIDE World Championship 2021 ended in a draw between GM Magnus Carlsen and GM Ian Nepomniachtchi, the Lichess broadcast chat immediately pounced upon the incredible accuracy the two players displayed.

The broadcast chat can occasionally get a bit... excitable (particularly with what it thinks are blunders), but we decided to check it out. For various reasons, some of our team know the lifetime average centipawn loss (ACPL) of some top players throughout history, and of previous FIDE World Championship matches. So we immediately knew that the ACPL figures from computer analysis did indeed seem to be really quite low, even by super-GM standards: 2 ACPL for Magnus Carlsen, and 3 ACPL for Ian Nepomniachtchi.

So, our man on the ground in Dubai felt comfortable asking “how do both players feel, having played what appears to be one of the most accurate FIDE World Championship games played in history, as assessed by engines?” At the time, the team had only manually checked through the 2010s, but after getting the answers from the players, we decided to fact-check ourselves and investigate the question more deeply.

A Brief History of Chess Engines and ACPL

But first, let’s take a step back. Some may be feeling confused by what computer analysis is, or what ACPL really means. So, let’s discuss computer analysis, chess engines, and ACPL briefly.

Almost since computers have existed, programmers have tried creating software which can play chess. The father of modern computing, Alan Turing, was the first recorded to have tried, creating a programme called Turochamp in 1948. Too complex for computers of the day to run, it played its first game in 1952 (and lost in under 30 moves against an amateur).

The Ferranti Mk 1 computer, the model Turochamp ran on. Note the many cupboards filled with hardware wired up to it

Since then, the software has improved significantly, with Deep Blue created by IBM famously defeating GM Garry Kasparov in 1997 — a landmark moment in popular culture where it seemed machines had finally overtaken humans. Chess software (now known as “chess engines”) continued to improve, and became capable of assessing and evaluating the best lines of chess to be played.

Stockfish is the name of one such chess engine, which just so happens to be free, open source software (just like Lichess). By 2017, Stockfish’s community had made it one of the strongest chess engines in the world, able to trivially beat the strongest human players even when running on a mobile phone. That year it was pitted against Google DeepMind’s AlphaZero. Stockfish 8 (the number referring to the version of the software) was completely annihilated by AlphaZero, in what remains a somewhat controversial matchup.

But even if Stockfish really had been fighting with one arm behind its back, it was still clearly outclassed. Consequently, the neural network method of assessing and evaluating positions that was initially used in shogi engines was eventually implemented in Stockfish, later also in collaboration with another popular and powerful chess engine inspired by AlphaZero, called Lc0 (or Leela Chess Zero). The latest result of this fruitful cooperation is known as Stockfish 14 NNUE, which is the chess engine Lichess uses for all post-game analysis when a user requests it, and the chess engine we used to measure the accuracy of all World Championship games.

Lichess's evaluation of the Round 3 game using Stockfish 14 NNUE

To help evaluate the accuracy of positions and gameplay, engines present their evaluation in centipawns (1/100th of a pawn). For example, if a move lost 100 centipawns, that’s the equivalent of a player losing a pawn. It doesn’t necessarily mean they actually physically lost a pawn — a loss of space or a worse position could be the equivalent of giving up a pawn physically.

The average centipawn loss (ACPL) averages this centipawn loss over all of a player’s moves in a game. So the lower a player’s ACPL, the more perfectly they played, in the eyes of the engine assessing it.
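To make the arithmetic concrete, here is a minimal sketch of an ACPL calculation. It is an illustration under stated assumptions, not the exact Lichess implementation: we assume evaluations are given in centipawns from White’s point of view after every move, that the starting position is worth 0, and that evaluations are clamped to ±1000 centipawns so a single huge swing cannot dominate the average. The names `CAP`, `clamp` and `acpl` are made up for this example.

```python
from typing import List, Tuple

CAP = 1000  # assumed clamp, in centipawns (an illustrative choice)


def clamp(cp: int, cap: int = CAP) -> int:
    """Limit an evaluation to the range [-cap, cap]."""
    return max(-cap, min(cap, cp))


def mean(values: List[int]) -> float:
    """Arithmetic mean, returning 0.0 for an empty list."""
    return sum(values) / len(values) if values else 0.0


def acpl(evals_white_pov: List[int], start_eval: int = 0) -> Tuple[float, float]:
    """Return (white_acpl, black_acpl).

    evals_white_pov[i] is the evaluation, in centipawns from White's point
    of view, of the position after ply i. White moves on even plies.
    """
    white_losses: List[int] = []
    black_losses: List[int] = []
    previous = clamp(start_eval)
    for ply, raw in enumerate(evals_white_pov):
        current = clamp(raw)
        if ply % 2 == 0:
            # White moved: a drop in the evaluation is White's loss.
            white_losses.append(max(0, previous - current))
        else:
            # Black moved: a rise in the evaluation is Black's loss.
            black_losses.append(max(0, current - previous))
        previous = current
    return mean(white_losses), mean(black_losses)


# Made-up evaluations for a six-ply game: Black's second move costs a pawn.
white, black = acpl([20, 20, 20, 120, 100, 100])
print(f"White {white:.1f}, Black {black:.1f}")  # White 6.7, Black 33.3
```

Moves that improve the evaluation for the mover count as zero loss rather than negative loss, which is why the average can never drop below 0.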

What we did

With that background out of the way, on to our process. We decided to run all World Championship games through Stockfish 14 NNUE, to try and rank their accuracy, and see if our initial claim was correct.

The first step was deciding what counted as World Championship matches, then collecting them all, and compiling them. This wasn’t as simple as it sounds. FIDE has only existed since 1924, but there are historic matches unofficially treated as World Championships. Likewise, the World Championship crown was briefly split between two competing organisations, and we had to consider how to treat that split.

Following most modern commentators, we decided the first historic match which was worthy of the World Championship title was played in 1886. We also recognised some of the PCA tournaments, and a Kramnik match, before FIDE reunified in 2007.

Once that was decided, we located the PGN (Portable Game Notation) files of the historical games, and made a Lichess study for each match, with one chapter per game.

After that step, all of the games and all of the matches were analysed by Stockfish 14 NNUE. However, the data still needed to be cleaned and prepared to make it structured and readable. Some of our developers collaborated on how to do this, with some fine-tuning of code required. A brief summary of this process follows, from the developer team:

“After downloading the analysed PGNs, we essentially replicated the process that Lichess uses to calculate the ACPL for a game — the code is available on GitHub for those interested in delving deeper. But at the end we had a CSV file with every game from WCC history, alongside the ACPLs of the players and the combined ACPL.”
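For readers who want a feel for that pipeline, the sketch below goes from an analysed PGN to a CSV row. It is not the team’s code (that lives in the GitHub repo), just a stdlib-only illustration. It relies on the `[%eval ...]` comments that Lichess writes into analysed PGNs, and assumes that every move carries one, that mate scores (written like `#3` or `#-3`) are mapped to ±1000 centipawns, and that the “combined” figure is the sum of both players’ ACPLs. The sample game, its evaluations and all function names are invented for the example.

```python
import csv
import io
import re

EVAL_TAG = re.compile(r"\[%eval\s+([^\]\s]+)\]")
HEADER_TAG = re.compile(r'^\[(\w+)\s+"(.*)"\]\s*$', re.MULTILINE)
CAP = 1000  # assumed clamp in centipawns; mate scores map to +/-CAP
FIELDS = ["event", "white", "black", "white_acpl", "black_acpl", "combined_acpl"]


def to_centipawns(token):
    """Convert an eval token such as '0.17' or '#-3' to clamped centipawns."""
    if token.startswith("#"):
        return -CAP if token[1:].startswith("-") else CAP
    return max(-CAP, min(CAP, round(float(token) * 100)))


def acpl_from_evals(evals):
    """Return (white_acpl, black_acpl) from White-POV evals, one per ply."""
    losses = ([], [])  # index 0 = White's moves, 1 = Black's moves
    previous = 0  # assumed evaluation of the starting position
    for ply, current in enumerate(evals):
        swing = previous - current if ply % 2 == 0 else current - previous
        losses[ply % 2].append(max(0, swing))
        previous = current
    return tuple(sum(side) / len(side) if side else 0.0 for side in losses)


def game_row(pgn_text):
    """Build one CSV row (as a dict) from a single analysed PGN game."""
    headers = dict(HEADER_TAG.findall(pgn_text))
    evals = [to_centipawns(token) for token in EVAL_TAG.findall(pgn_text)]
    white, black = (round(value) for value in acpl_from_evals(evals))
    return {
        "event": headers.get("Event", ""),
        "white": headers.get("White", ""),
        "black": headers.get("Black", ""),
        "white_acpl": white,
        "black_acpl": black,
        "combined_acpl": white + black,  # assumption: combined = sum
    }


def write_csv(rows, handle):
    """Write the rows to an open text handle with a header line."""
    writer = csv.DictWriter(handle, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)


# Invented sample game with made-up evaluations.
SAMPLE_PGN = """
[Event "Example Match"]
[White "Player A"]
[Black "Player B"]

1. e4 { [%eval 0.2] } 1... e5 { [%eval 0.2] } 2. Nf3 { [%eval 0.2] }
2... f6 { [%eval 1.2] } 3. Nxe5 { [%eval 1.0] } 3... fxe5 { [%eval 1.0] } *
"""

buffer = io.StringIO()
write_csv([game_row(SAMPLE_PGN)], buffer)
print(buffer.getvalue())
```

A real run would loop over every game of every match and handle games where the final move has no evaluation attached; this sketch skips both for brevity.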

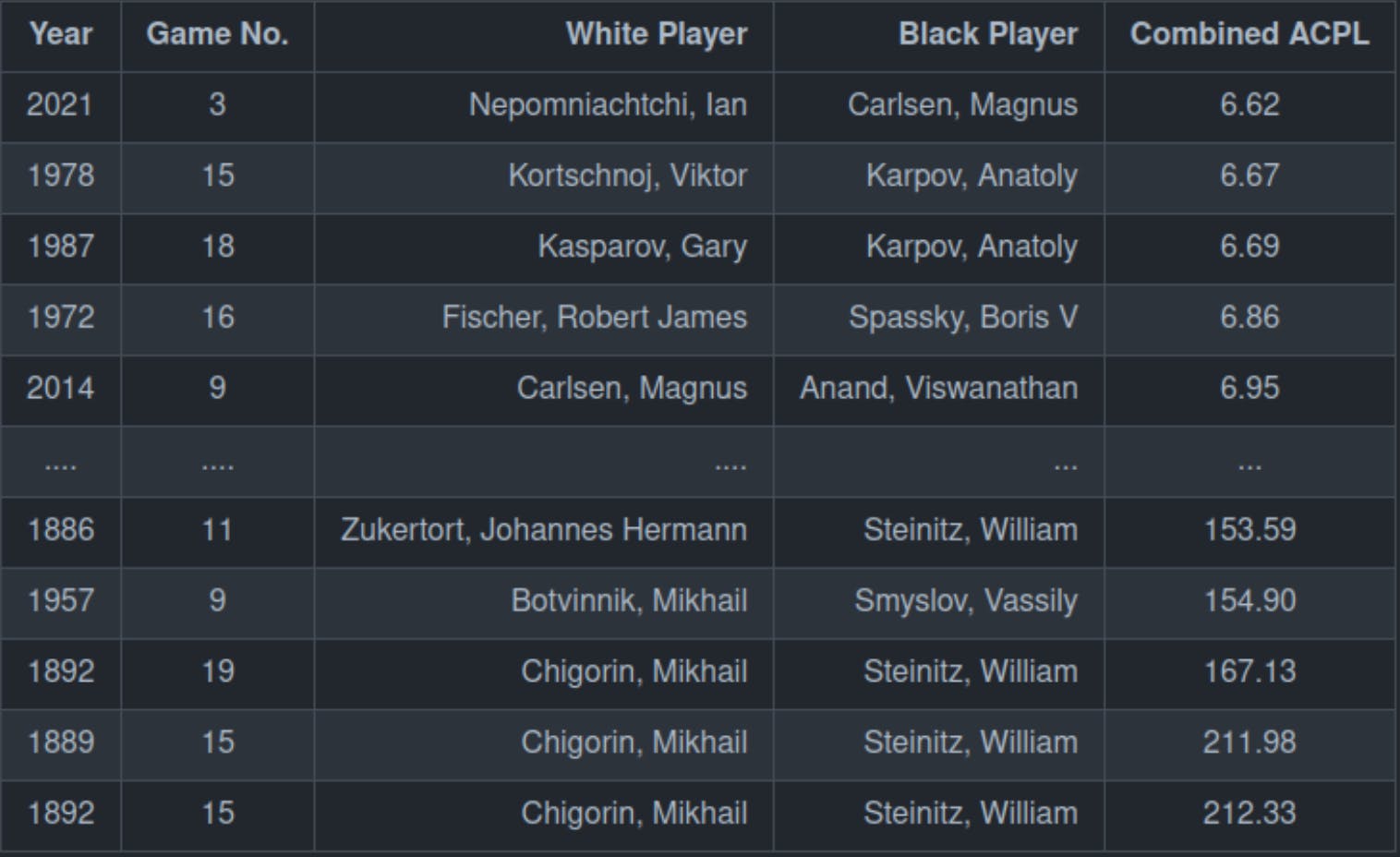

The top 5 most accurate and bottom 5 least accurate World Championship games, according to engine analysis

With the data prepared and cleaned, it was time for the data to be visually presented, to make it more easily interpreted by humans. From a simple tabular form, it was clear that the initial claim was supported — and rather than the round 3 game being “one of the most accurate games played in a World Championship” it was actually the most accurate ever played in World Championship history.

The charts

A variety of charts were created, but generally all showed the same trends. We ignored forfeited games (Fischer and Kramnik each forfeited a game).

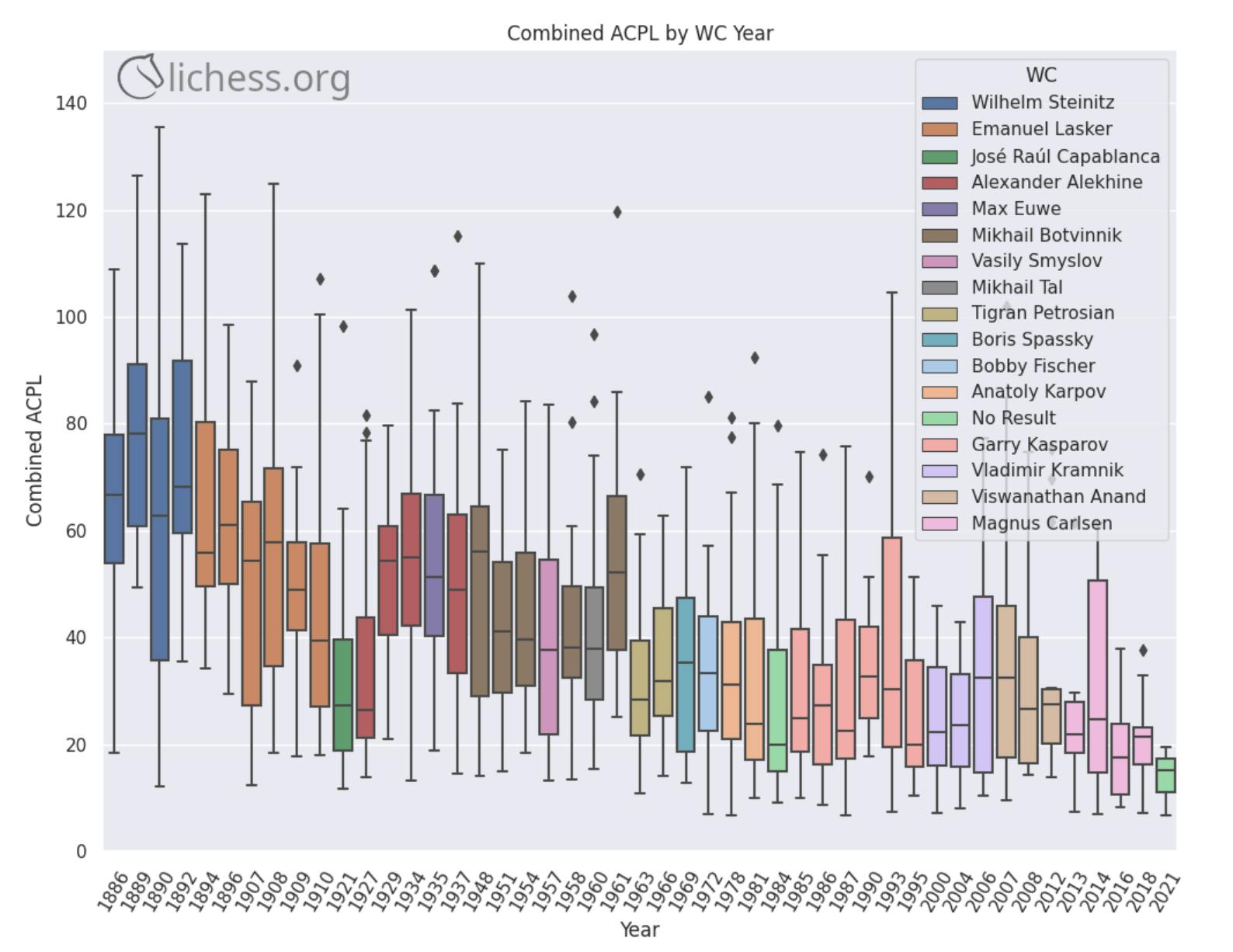

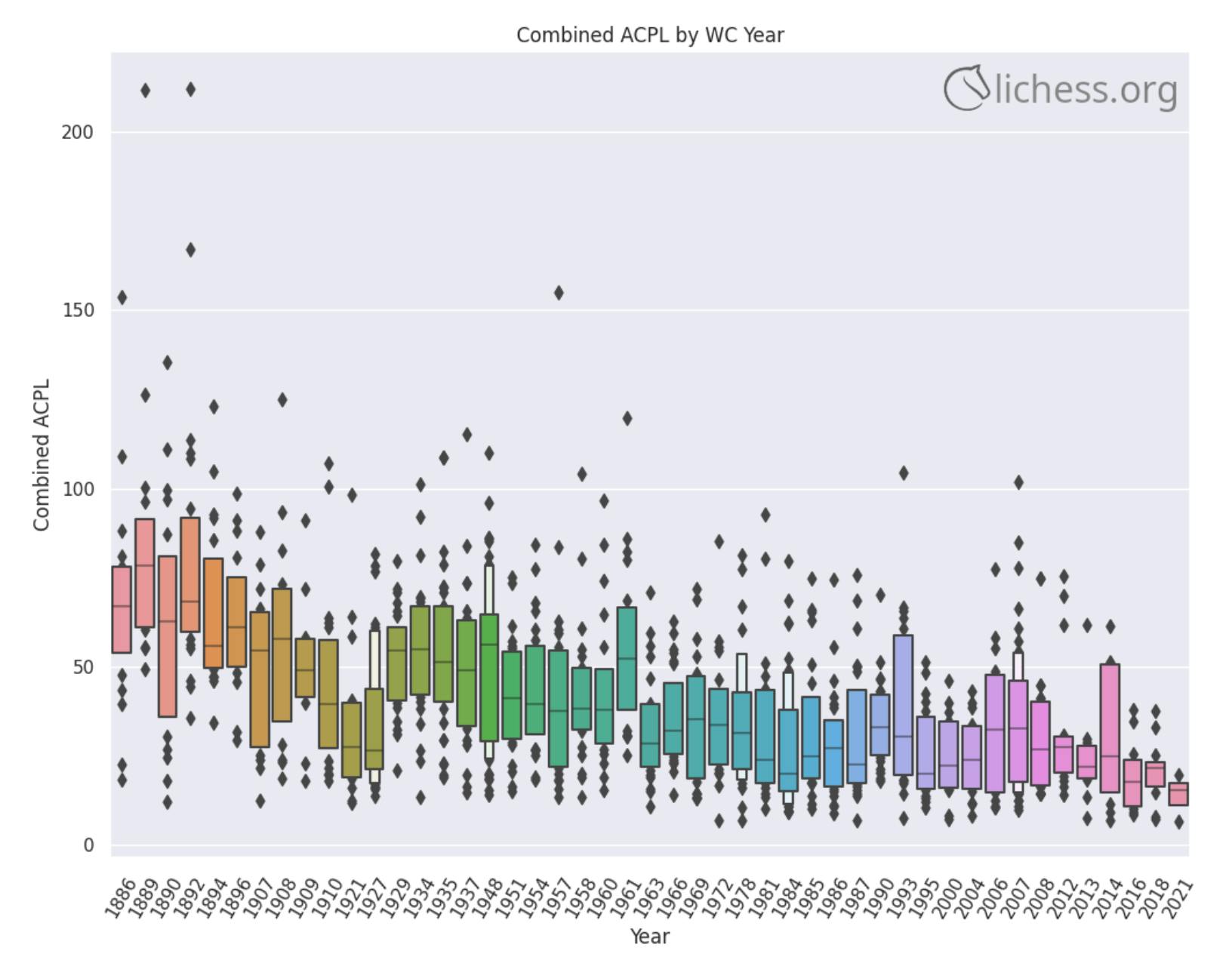

Box and whisker plot representing the accuracy of World Championship games, grouped by the year they were played

Over time, it can be seen that chess has trended from being played quite inaccurately even at the very highest levels, to being played with almost laser-like precision.

For example, at the Victorian end of the scale, there are some outlier games which have over 200 ACPL combined. Many modern bullet games between club-level players are played more accurately!

The World Champions Lasker and Capablanca then quickly improved the quality, to be comparable with the modern pre-computer era.

The bars represent the interquartile range and median ACPL of games played each year

Following Botvinnik, Smyslov and Tal, the range of the ACPL generally tightened, with smaller fluctuations and fewer dramatic outliers.
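The “range” in these charts is the interquartile range: the spread of the middle half of the games in a given year. As a rough sketch of how such a summary could be computed, assuming a list of `(year, combined_acpl)` pairs (the function name and the sample numbers below are invented, and the quartile method is Python’s default, which may differ slightly from the one used for the charts):

```python
from collections import defaultdict
from statistics import median, quantiles


def summarise_by_year(games):
    """Map each year to (first quartile, median, third quartile).

    games is an iterable of (year, combined_acpl) pairs.
    """
    by_year = defaultdict(list)
    for year, value in games:
        by_year[year].append(value)

    summary = {}
    for year, values in sorted(by_year.items()):
        if len(values) < 2:
            # A single game has no spread, so both quartiles equal it.
            q1 = q3 = values[0]
        else:
            q1, _, q3 = quantiles(values, n=4)
        summary[year] = (q1, median(values), q3)
    return summary


# Made-up numbers: seven games in one year, a single game in another.
sample = [(1900, v) for v in (10, 20, 30, 40, 50, 60, 70)] + [(2000, 12)]
for year, (q1, mid, q3) in summarise_by_year(sample).items():
    print(year, "median", mid, "IQR", q3 - q1)
```

A tighter interquartile range means the games of that year were more consistent with each other, independently of whether they were accurate overall.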

In the computer era, from around 2007, the ACPL of players dropped further and tightened even more significantly, showing the important role chess engines have played within top level chess. Anand in particular can be seen embracing the role of computers and significantly improving his accuracy over time, largely in parallel with chess engines growing in strength.

And, despite only being 3 games in at the time of the analysis, the current World Championship appears to be on track as the most accurate yet.

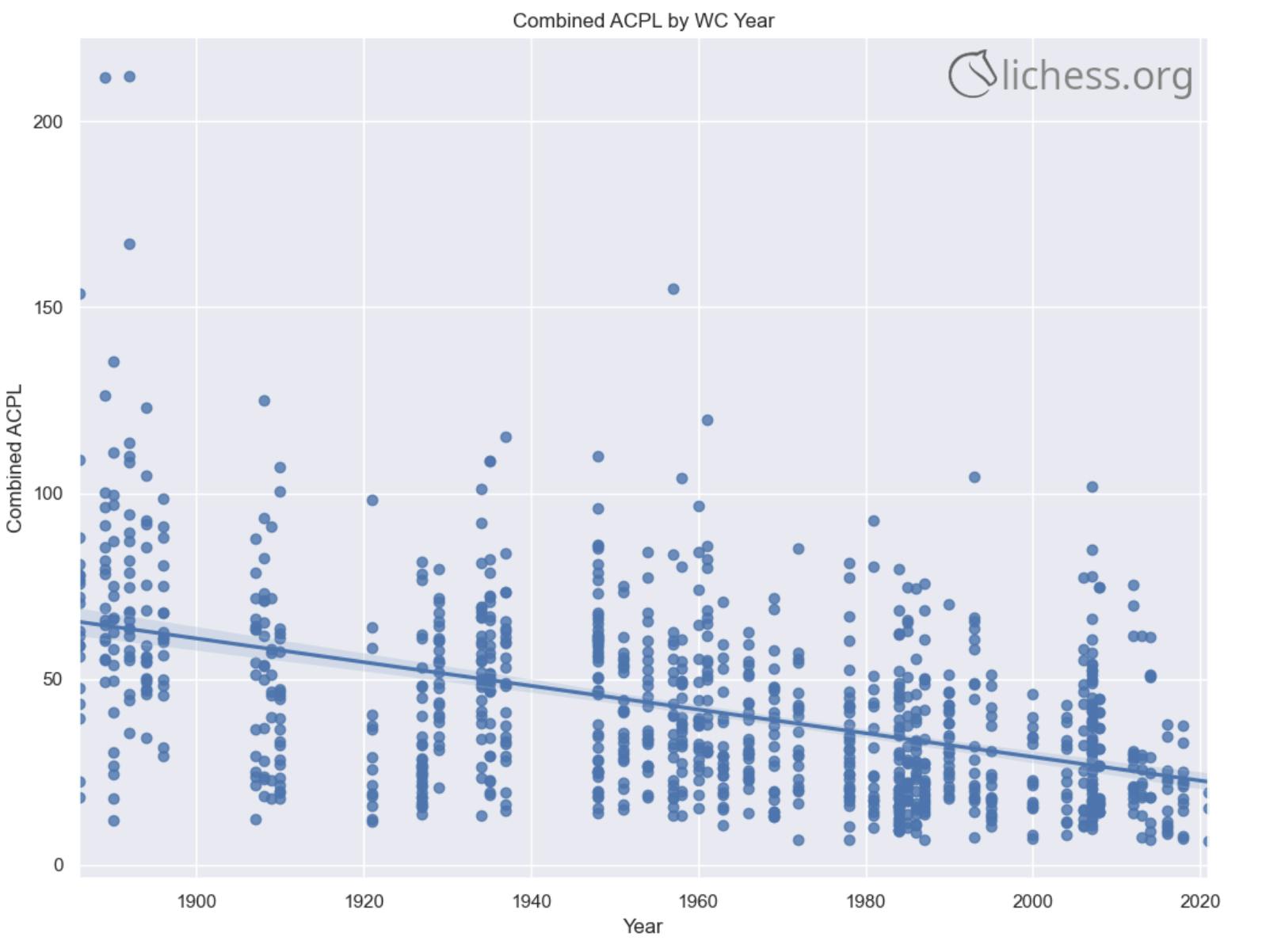

Showing the line of best fit over time
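For the curious, a line of best fit like the one charted above can be obtained with ordinary least squares. The sketch below assumes that method (the chart may have been produced differently) and uses invented data points purely to show the calculation.

```python
def line_of_best_fit(xs, ys):
    """Return (slope, intercept) of the least-squares line y = slope * x + intercept."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of squared deviations of x, and of the x/y co-deviations.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0:
        raise ValueError("all x values are identical")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up data: combined ACPL falling by 20 every 50 years.
years = [1900, 1950, 2000]
combined_acpl = [60, 40, 20]
slope, intercept = line_of_best_fit(years, combined_acpl)
print(f"ACPL changes by {slope:.2f} per year")  # ACPL changes by -0.40 per year
```

A negative slope corresponds to the downward trend in the chart: the later the match, the lower the typical ACPL.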

Perhaps surprisingly, on their best days even the champions from the 1910s and 1920s played with accuracy comparable to those who came later. It’s also remarkable just how accurate some of the games played prior to the computer era were, with names like Fischer, Kasparov and Karpov all appearing in the top 5 most accurate games (other than Carlsen, only Karpov appears twice). But unsurprisingly, the biggest jumps have come from those chess players who treated the game as a science rather than as an art, and from the enduring impact chess engines have had on the chess elite.

But, as Magnus Carlsen’s pithy response highlights (“I’m very proud, but it’s still only half a point”), whilst this stuff is interesting overall, it practically means very little in the context of competition if the players aren’t able to convert the accuracy to points. Ian Nepomniachtchi was equally nonchalant when asked how he felt to be part of the most accurate game in World Championship history: “that’s a very murky question to ask before the anti-doping tests”.

Conclusion

The best chess players humanity has to offer are potentially thinking more like machines than ever before — or at least playing in a style which has the approval of the strongest chess engines; likely the very same chess engines they use to prepare and train against.

Thanks to reddit user ChezMere for the idea

At the time of publishing, the last decisive game in the World Championship was game 10 of the World Championship 2016, played 1835 days ago, or 5 years and 9 days. Is the singularity being reached, with man and machine minds melding towards inevitable monochromatic matches?

There is a light at the end of the tunnel, though, and some bias of chess engines must be touched upon. Humans aren’t machines, regardless of how much a player may imitate or learn from them. Generally, all of the most accurate games played were relatively short. They usually featured openings with multiple moves allowing accurate play, or very deeply theorised lines. Pieces, which allow for complications and human-like mistakes to creep in, were often exchanged evenly and very quickly. The endgames that they moved into were usually theoretical or forced draws, with all moves being equally accurate. So, once those longer games or those complications are reintroduced into the mix, we’re still far from the singularity. Otherwise, all of the most accurate games would have come from the computer era.

So, whilst many fans of chess may feel surprised by the number of draws occurring in the World Championship, it’s too soon to say if that’s due to the increasing accuracy within chess. After all, even prior to engines the 1984/5 World Championship match between Anatoly Karpov and Garry Kasparov featured 17 successive draws (games 10 to 26) and then another 14 successive draws (games 33 to 46). Sometimes, the best in the world just can’t get past each other.

Proviso

Various things should be considered when reviewing the data.

First of all, if you would like to try and reproduce it, you’re welcome to, and please let us know what results you get. It’s likely you’ll get slightly different results from us, based on the parameters you use (for example, the depth of the search), or even the machine you run the engine on. Each machine will often give some small fluctuations, even with the same parameters, so whilst your top 5 games should still be the same, that can’t be guaranteed.
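If you do try to reproduce the analysis, the search depth is set through the UCI protocol that Stockfish and most other engines speak: the engine is sent a `position` command followed by `go depth N`, and answers with `info` lines carrying either `score cp <centipawns>` or `score mate <moves>`. The helper names below are hypothetical and the sample `info` line is illustrative rather than real engine output; the sketch only builds the commands and parses a reply, and does not launch an engine.

```python
import re
from typing import List, Optional, Tuple

# "\bdepth" deliberately does not match the "seldepth" field.
INFO_SCORE = re.compile(r"\bdepth (\d+)\b.*?\bscore (cp|mate) (-?\d+)")


def uci_commands(moves: List[str], depth: int) -> List[str]:
    """Build the UCI commands to analyse the position after the given moves."""
    position = "position startpos"
    if moves:
        position += " moves " + " ".join(moves)
    return [position, f"go depth {depth}"]


def parse_info(line: str) -> Optional[Tuple[int, str, int]]:
    """Return (depth, kind, value) from an engine 'info' line, or None.

    kind is 'cp' (centipawns) or 'mate' (moves to mate), as defined by UCI.
    """
    if not line.startswith("info "):
        return None
    match = INFO_SCORE.search(line)
    if match is None:
        return None  # e.g. 'info string ...' or a currmove update
    return int(match.group(1)), match.group(2), int(match.group(3))


print(uci_commands(["e2e4", "e7e5"], depth=20))
# Illustrative line in the UCI format, with invented numbers:
sample = "info depth 20 seldepth 28 multipv 1 score cp 17 nodes 1500000 pv g1f3"
print(parse_info(sample))
```

Running the same positions at two different depths and comparing the parsed scores is a simple way to see how much your own results depend on that parameter.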

Secondly, players often train with computers much more powerful, searching at a much greater depth, than the analysis we used to make the leaderboard. So, the ACPL we obtained may just be an artefact of that difference of depth. What’s considered bad by Stockfish 14 NNUE at depth 20 could be considered good at depth 45.

Thirdly, as touched on in the article, if the players are preparing and training with Stockfish 14 NNUE, then Stockfish 14 NNUE will naturally prefer the moves and recommendations it would make itself. Consequently, both players could just be playing most accurately in the eyes of Stockfish 14 NNUE, simply because they’re using and following the lines of Stockfish 14 NNUE. If we’d used a different chess engine, even a weaker version of the same one such as Stockfish 12, it may have found the 2018 World Championship the most accurate in history (assuming both players prepared and trained using Stockfish 12 in 2018).

Fourthly, this is all viewed in the eyes of an engine. It shouldn’t discount the brilliant chess being played, nor the human analysis made by commentators. The engine is not a divine being. These are just some interesting findings and a bit of an exploration of them. Nothing more should be read into it than that!

Special thanks

All this was made possible by the Lichess community, but primarily by:

- BenWerner

- NoJoke

- Revoof

- Seb32

- Somethingpretentious

The full code, data and charts can be found in this GitHub repo.