Stockfish 12 on Lichess

Shorter waits for stronger analysis

Three months after its groundbreaking release, Stockfish 12 (or rather the current development version of Stockfish 13) is finally available on Lichess, for both server-side and client-side analysis.

If you’re not interested in all the technical details, here’s a short summary:

- Stockfish 12 NNUE is more than 100 Elo stronger than the previously used version of Stockfish 11.

- Stockfish 12 (classical, not NNUE) is now available for local analysis.

- Analysis tabs once again remember if you turned the engine on or off.

A new version of fishnet, Lichess's distributed analysis software, is available. Updates include:

- Optimized "queuing" of games for analysis, meaning shorter waits.

- Automatically picking the best Stockfish build for the contributor's hardware from the wider range of available targets.

- Consistent analysis quality.

- Rewritten in Rust (from Python).

Stockfish 12

Among other improvements, Stockfish 12 brings a major new feature, NNUE (or ƎUИИ, for Efficiently Updatable Neural Network). NNUE optionally replaces the handcrafted evaluation function with a neural network.

The main innovation is the ability to update the evaluation incrementally after each move, instead of evaluating the entire neural network from scratch. Stockfish remains a CPU-based engine, and offers various build targets that make progressively more use of modern vector instructions.
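The idea behind the incremental update can be sketched in a few lines. In an NNUE-style network, the output of the first layer (the "accumulator") is a bias plus one weight column per active input feature, roughly "this piece on this square". A move only toggles a few features, so the accumulator can be patched by subtracting the columns of removed features and adding those of added ones. This is a simplified illustration, not Stockfish's code: the layer width, the `i16` weights and the feature ids are made up, and the real network has further layers, two perspectives and king-relative features.

```rust
/// Width of the first hidden layer (tiny and illustrative; the real network is far wider).
const HIDDEN: usize = 4;

/// Hypothetical first-layer parameters: one weight column per input feature, plus a bias.
struct FirstLayer {
    weights: Vec<[i16; HIDDEN]>, // indexed by feature id
    bias: [i16; HIDDEN],
}

impl FirstLayer {
    /// Adds (sign = 1) or subtracts (sign = -1) one feature's weight column.
    fn apply(&self, acc: &mut [i16; HIDDEN], feature: usize, sign: i16) {
        for (a, w) in acc.iter_mut().zip(self.weights[feature].iter()) {
            *a += sign * *w;
        }
    }

    /// Full computation from scratch: bias plus the columns of every active feature.
    fn refresh(&self, active: &[usize]) -> [i16; HIDDEN] {
        let mut acc = self.bias;
        for &f in active {
            self.apply(&mut acc, f, 1);
        }
        acc
    }

    /// Incremental update after a move: only the features that changed are touched.
    fn update(&self, acc: &mut [i16; HIDDEN], removed: &[usize], added: &[usize]) {
        for &f in removed {
            self.apply(acc, f, -1);
        }
        for &f in added {
            self.apply(acc, f, 1);
        }
    }
}
```

The cost of `update` depends only on how many features a move changes (two for a quiet move in this simple encoding, three for a capture), not on how many pieces are on the board. In Stockfish's actual king-relative features, a king move still forces a full refresh for that side.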

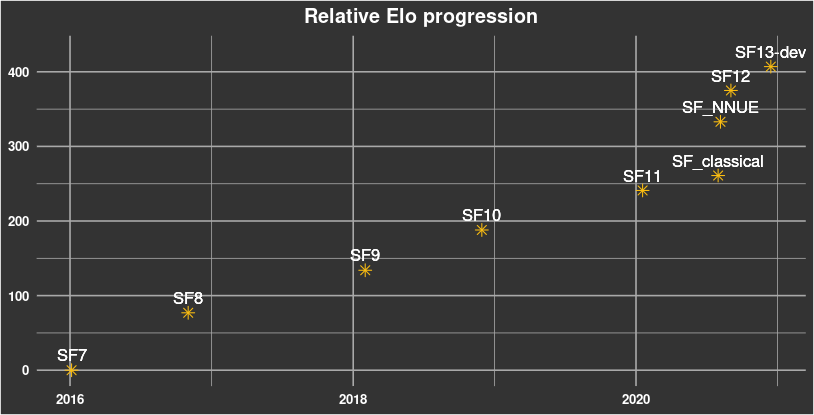

The graph above shows the strength improvements over the last Stockfish releases. SF_classical is the final development version before SF_NNUE landed. After the jump to SF_NNUE, some gains are specific to NNUE, while others also apply to Stockfish with NNUE turned off.

Congratulations to all Stockfish contributors!

fishnet v2, rewritten in Rust

Server-side analysis on Lichess is brought to you by fellow users who volunteer their CPU time using the fishnet client. fishnet started as a short and simple Python script that accumulated a lot of features over time.

To pay down technical debt, it has now been rewritten in Rust. Rust’s type and ownership system is amazing at catching issues at compile time, and it was refreshing to be able to make major refactorings of concurrent code with confidence.

fishnet uses tokio for asynchronous communication with the Lichess API, a local queue, the engine processes, and the user, who can stop the client at any time. Each of these is an asynchronous task, and the tasks talk to each other over channels.
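The task-and-channel structure can be illustrated without tokio. The sketch below deliberately swaps in plain `std::thread` and `std::sync::mpsc` channels so that it runs with the standard library alone; fishnet itself uses tokio's asynchronous tasks. The `Job` and `Outcome` types and the fake "engine" are hypothetical stand-ins for the real API and engine messages.

```rust
use std::sync::mpsc;
use std::sync::{Arc, Mutex};
use std::thread;

/// Hypothetical unit of work: one position of a game to analyse.
struct Job {
    game: u32,
    ply: u32,
}

/// Hypothetical result sent back towards the "API" side.
#[derive(Debug, PartialEq)]
struct Outcome {
    game: u32,
    ply: u32,
    score: i32,
}

/// Stand-in for running the engine on a position.
fn fake_engine(job: &Job) -> i32 {
    (job.game * 100 + job.ply) as i32
}

/// Runs `workers` engine threads that pull jobs from a shared channel and
/// report results on a second channel. Dropping the job sender is the
/// shutdown signal, similar to a user stopping the client.
fn run(jobs: Vec<Job>, workers: usize) -> Vec<Outcome> {
    let (job_tx, job_rx) = mpsc::channel::<Job>();
    let (out_tx, out_rx) = mpsc::channel::<Outcome>();
    // std's Receiver has a single consumer, so workers share it behind a mutex.
    let job_rx = Arc::new(Mutex::new(job_rx));

    let handles: Vec<_> = (0..workers)
        .map(|_| {
            let job_rx = Arc::clone(&job_rx);
            let out_tx = out_tx.clone();
            thread::spawn(move || loop {
                // The lock guard is dropped at the end of this statement.
                let next = job_rx.lock().unwrap().recv();
                match next {
                    Ok(job) => {
                        let score = fake_engine(&job);
                        out_tx.send(Outcome { game: job.game, ply: job.ply, score }).unwrap();
                    }
                    Err(_) => break, // job channel closed: no more work
                }
            })
        })
        .collect();
    drop(out_tx); // from now on only the workers hold result senders

    for job in jobs {
        job_tx.send(job).unwrap();
    }
    drop(job_tx); // workers exit once the queue has drained

    // Ends when every worker has exited and dropped its sender.
    let mut results: Vec<Outcome> = out_rx.iter().collect();
    for h in handles {
        h.join().unwrap();
    }
    results.sort_by_key(|o| (o.game, o.ply));
    results
}
```

With tokio, the threads would become spawned tasks and the channels `tokio::sync::mpsc` channels, but the ownership pattern (clone a sender per producer, drop it to signal completion) stays the same.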

As a systems programming language, Rust also provides convenient access to CPU feature detection, so fishnet can pick the best corresponding Stockfish build without resorting to this terrible hack in Python, which uses ctypes to allocate executable memory and run hardcoded machine instructions. This matters because Stockfish 12 benefits immensely from the availability of vector instructions and supports a much wider range of target features.
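In Rust, runtime detection is a standard library macro, `is_x86_feature_detected!`, so no executable-memory tricks are needed. The following is a minimal sketch, not fishnet's actual code: the features checked, their mapping to builds, and the target names (modelled loosely on Stockfish's Makefile `ARCH` values) are assumptions.

```rust
/// CPU features relevant to choosing a build (an illustrative subset).
#[derive(Debug, Default, Clone, Copy)]
pub struct CpuFeatures {
    pub avx2: bool,
    pub bmi2: bool,
    pub sse41: bool,
    pub popcnt: bool,
    pub ssse3: bool,
}

/// Runtime detection on x86-64 via the standard library.
#[cfg(target_arch = "x86_64")]
pub fn detect() -> CpuFeatures {
    CpuFeatures {
        avx2: is_x86_feature_detected!("avx2"),
        bmi2: is_x86_feature_detected!("bmi2"),
        sse41: is_x86_feature_detected!("sse4.1"),
        popcnt: is_x86_feature_detected!("popcnt"),
        ssse3: is_x86_feature_detected!("ssse3"),
    }
}

/// On other architectures, report no x86 features.
#[cfg(not(target_arch = "x86_64"))]
pub fn detect() -> CpuFeatures {
    CpuFeatures::default()
}

/// Tries builds from most to least demanding and returns the first whose
/// (assumed) requirements are all met.
pub fn pick_build(f: CpuFeatures) -> &'static str {
    if f.bmi2 && f.avx2 && f.popcnt {
        "x86-64-bmi2"
    } else if f.avx2 && f.popcnt {
        "x86-64-avx2"
    } else if f.sse41 && f.popcnt {
        "x86-64-sse41-popcnt"
    } else if f.ssse3 {
        "x86-64-ssse3"
    } else {
        "x86-64"
    }
}
```

Keeping detection (`detect`) separate from the decision (`pick_build`) means the selection logic can be tested with any combination of features, not just those of the machine running the tests.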

Other improvements include:

- fishnet is now available for aarch64, with Stockfish built using emulation on GitHub Actions.

- fishnet now uses multi-variant Stockfish only for variant games or games with exotic material combinations, for an efficiency improvement of about 10%.

- The decision to value consistency and debuggability over utmost efficiency. Your server-side analysis will now have consistent quality, no matter which client provided it. This means using the lowest common denominator: 1 thread and small hash tables. It also means using NNUE even on old hardware. The rest of this post explains how fishnet v2 nevertheless delivers stronger analysis, and delivers it more quickly.

- Node targets are tuned so that analysis takes the same time as with Stockfish 11 on middle-aged x86-64-sse41-popcnt CPUs. (By this measure, 4,000,000 classical nodes appear to take about as long as 2,250,000 NNUE nodes.) This calibration means you actually get a strength improvement out of this update, rather than having all of the gains converted into efficiency. Older CPUs (the minority) will take longer to reach the node target, while newer CPUs (the majority) reach it more quickly.
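The node equivalence in the last point can be read as simple arithmetic under one assumption: that the time to reach a node target is roughly the node count divided by the search speed v, in nodes per second. Equal times on the reference CPU then give:

```latex
\frac{4\,000\,000}{v_{\text{classical}}} = \frac{2\,250\,000}{v_{\text{NNUE}}}
\quad\Longrightarrow\quad
\frac{v_{\text{NNUE}}}{v_{\text{classical}}} = \frac{2\,250\,000}{4\,000\,000} = 0.5625
```

In other words, on that hardware NNUE searches at roughly 56% of the speed of Stockfish 11's classical evaluation, which is why its node target is correspondingly lower.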

Thanks to all fishnet contributors! If you are still running v1, you will hear from us soon, nudging you to upgrade. The README has instructions for upgrading, or for starting to contribute.

fishnet queuing

Server-side analysis requests on Lichess are appended to one of two queues: the “user queue”, for analysis requested by humans, and the “system queue”, for automated analysis (for example, as a first step in identifying suspicious games for a closer look).

The user queue needs to be processed very quickly, because the user might be actively waiting for the result. The system queue is allowed to build some backlog at peak time, but should be cleared during the day.

Each fishnet client uses the queue status and an estimate of its own performance to decide where it would be most useful. Consider a slow fishnet client with 1 core. If the user queue is in its normal state, it will simply do system analysis and let faster clients handle the user queue. It will pick up work from the user queue only if it estimates that it can finish a game before a faster client has a chance to do so.
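The decision can be sketched as a small function. Everything here is a hypothetical model rather than fishnet's actual heuristic: the client is assumed to know its own speed in positions per second, the size of the oldest waiting user game, and an estimate of how long a faster client would take to finish it.

```rust
/// Which Lichess queue a client should pull from.
#[derive(Debug, PartialEq)]
enum Queue {
    User,
    System,
}

/// Hypothetical snapshot of the user queue, as seen by one client.
struct UserQueueStatus {
    /// Number of positions in the oldest waiting user game, if any.
    oldest_game_positions: Option<u32>,
    /// Estimated seconds until a faster client would have finished that game.
    eta_by_faster_client: f64,
}

/// Chooses a queue given this client's estimated speed (positions per second).
fn choose_queue(status: &UserQueueStatus, my_positions_per_sec: f64) -> Queue {
    match status.oldest_game_positions {
        Some(n) => {
            let my_eta = f64::from(n) / my_positions_per_sec;
            // Help with user analysis only if we would beat a faster client.
            if my_eta < status.eta_by_faster_client {
                Queue::User
            } else {
                Queue::System
            }
        }
        None => Queue::System, // nobody is waiting: do system analysis
    }
}
```

In this model, a backlog in the user queue shows up as a larger `eta_by_faster_client`, which is what eventually lets slow clients help out at peak times.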

For the time being, fishnet batches always consist of complete games (with openings coming from stored cloud evaluations), so each analysed game can be attributed to a single client.

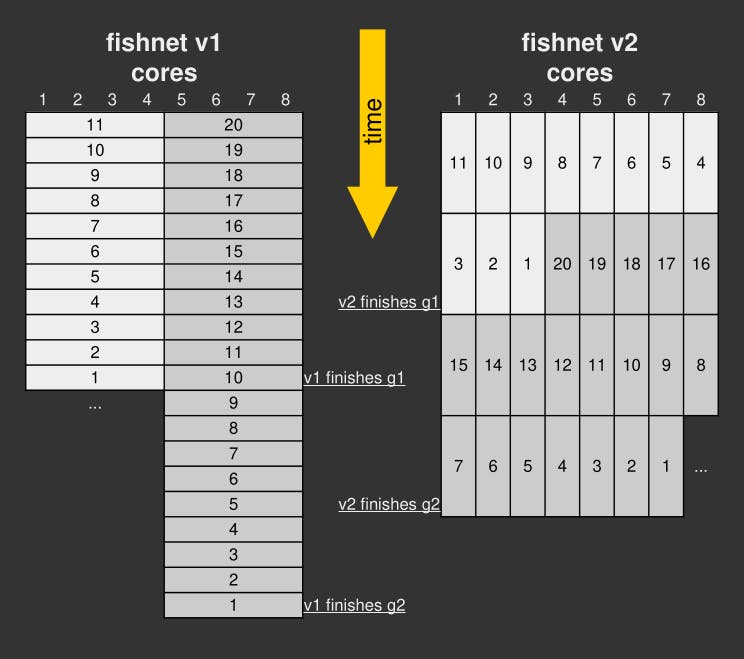

Most games have more positions than the typical number of CPU cores, so some queuing also happens locally. For simplicity, the old Python client put the available cores into groups and then ran mostly independent workers for each group. The diagram below shows an idealized scenario with an 8-core fishnet client analysing 2 short games of 11 and 20 positions. (In reality, not all positions take equal time.) fishnet v2 instead lets each core individually pull from a local queue, which in turn pulls from the Lichess API when it runs empty. This change means that analysis finishes much faster on average, without taking longer in the worst case.
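The effect can be reproduced with a toy model of the idealized scenario: every position takes one time step, positions are handed out in game order, and a game is finished when its last position completes. The grouped variant assumes, purely for illustration, that the old client split the 8 cores into 2 groups of 4 and gave each game to the group that became idle first; the real grouping in the Python client may have differed.

```rust
/// Finish step of each game when every core pulls the next position
/// from one shared FIFO queue. Each game must have at least one position.
fn shared_queue_finish(cores: usize, games: &[usize]) -> Vec<usize> {
    let mut finished = Vec::with_capacity(games.len());
    let mut queued = 0; // positions enqueued so far, across all games
    for &positions in games {
        assert!(positions > 0, "games must have at least one position");
        queued += positions;
        // Position i (0-based) runs during step i / cores; the game's last
        // position has index queued - 1 and completes at the end of its step.
        finished.push((queued - 1) / cores + 1);
    }
    finished
}

/// Finish step of each game when cores are split into fixed groups and
/// each game is handed to the group that becomes idle first.
fn grouped_finish(groups: usize, cores_per_group: usize, games: &[usize]) -> Vec<usize> {
    let mut free_at = vec![0; groups]; // step at which each group becomes idle
    games
        .iter()
        .map(|&positions| {
            // Earliest idle group; min_by_key returns the first one on ties.
            let g = (0..groups).min_by_key(|&g| free_at[g]).unwrap();
            // Ceiling division: steps this group needs for the whole game.
            free_at[g] += (positions + cores_per_group - 1) / cores_per_group;
            free_at[g]
        })
        .collect()
}
```

Under these assumptions, `shared_queue_finish(8, &[11, 20])` gives steps 2 and 4, while `grouped_finish(2, 4, &[11, 20])` gives steps 3 and 5: with a shared queue no core sits idle while another group still has positions left.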

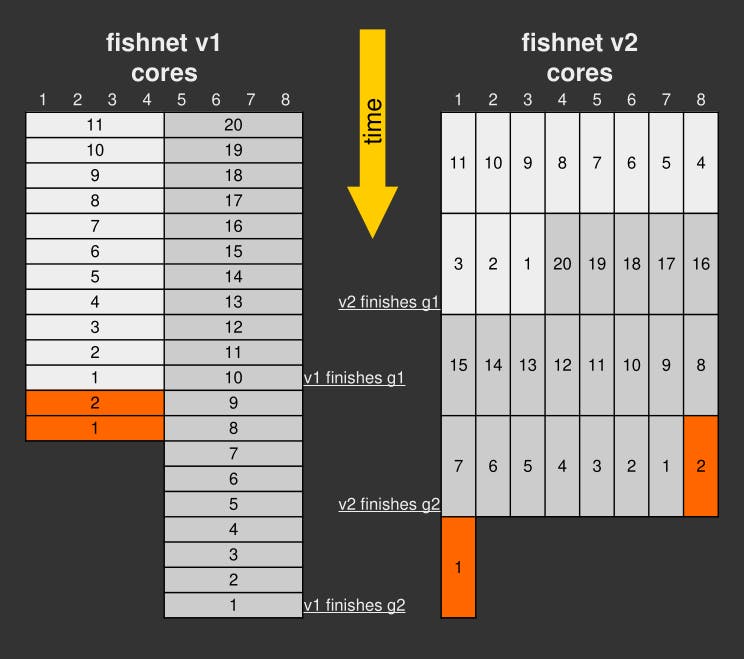

You may notice that the last statement is not entirely true (especially with the switch to single-threaded analysis). A short game (like the orange one in the diagram above) can take slightly longer if only a few positions remain for analysis in the final time step. This effect is negligible on longer games, and short games are … well … short anyway.

Stockfish 12 in WebAssembly

Lichess maintains a fork of Stockfish with WebAssembly support, but Stockfish 12 NNUE is not quite ready for the web.

A small obstacle is that the compressed neural network file is about 10 MB. For comparison, all of Lichess’s compressed client-side assets (all pages, JavaScript, CSS, all board themes, all 2D and 3D piece themes, and all sound sets) sum to 35 MB, a figure that we try hard to keep low.

Time will tell how much the Elo gap between NNUE and classical evaluation grows, and what the best tradeoff for client-side analysis turns out to be.

More importantly, the WASM build of Stockfish 12 NNUE appears to be inexplicably slow, even considering the absence of vector instructions in WebAssembly. In a sense, this might be good news: the slowness may be due to a bug or oversight that is easy to fix once found, rather than a more fundamental issue. Experimental WASM SIMD gives a speedup, but not enough to make up for the poor baseline performance. If you know your way around C++, emscripten and WebAssembly, tackling this issue would be an amazing contribution. The last attempt can be found in this pull request, which is simply stockfish.wasm with a hack to make the EvalFile available in memory.

Nonetheless, Stockfish 12+ delivers considerable improvements even with NNUE turned off, so let’s withhold it no longer! We have updated the client-side engine to the strongest available build. We have also taken this opportunity to make analysis remember the Stockfish toggle state again (at least per tab/session).