Introducing Maia, a human-like neural network chess engine

A guest post from the Maia Team

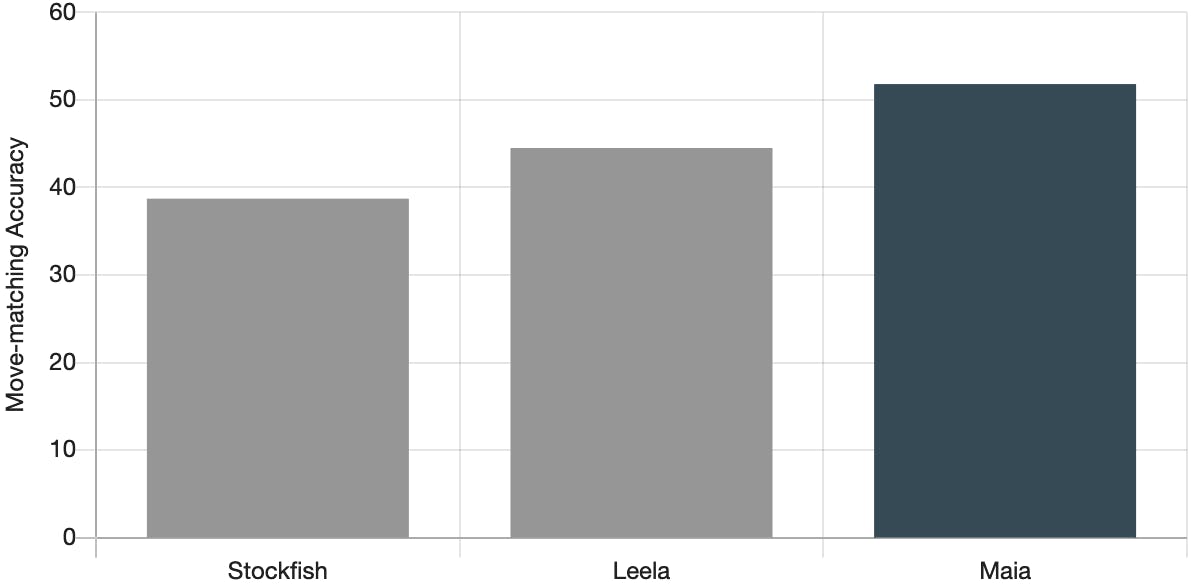

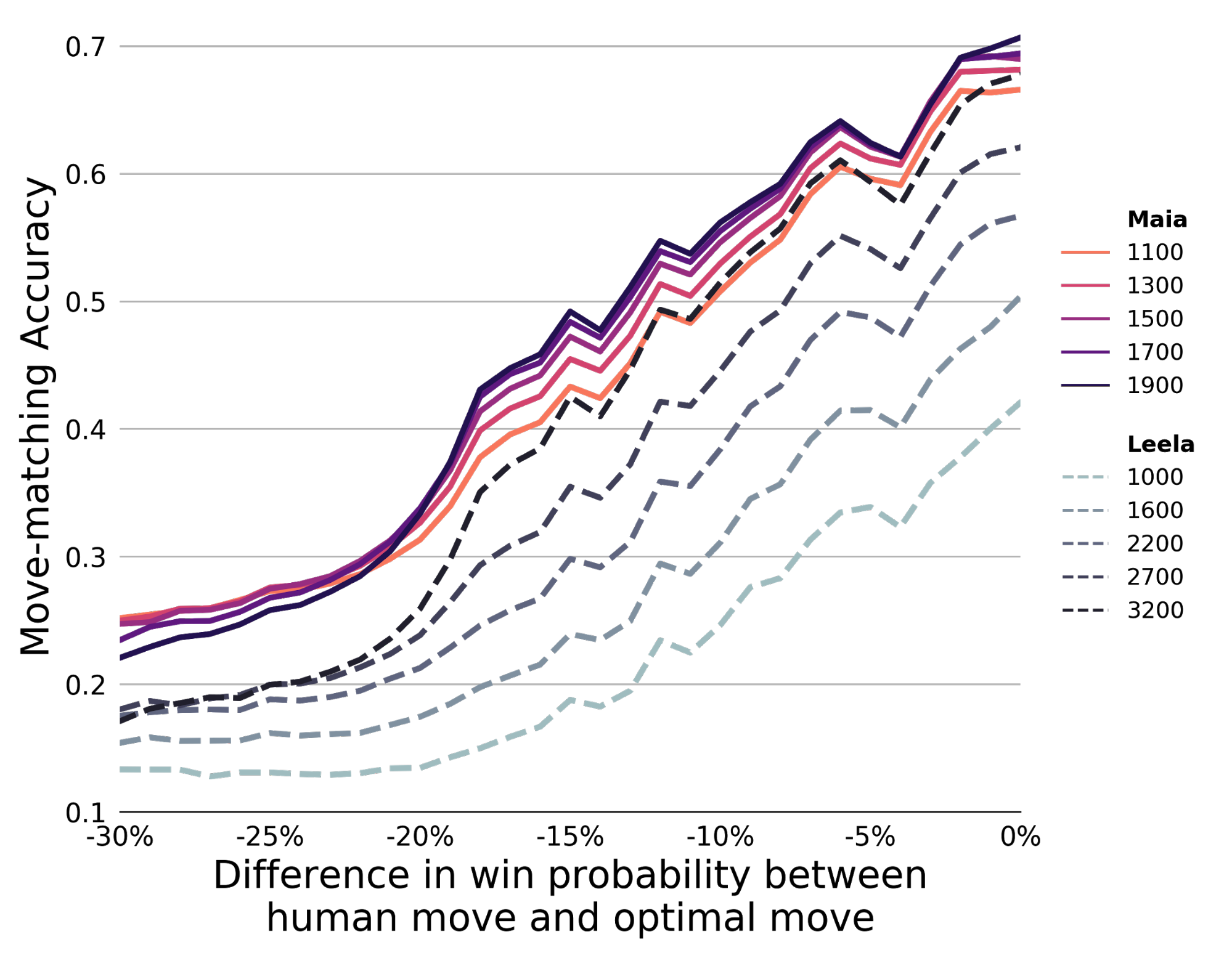

We're happy to announce Maia, a human-like neural network chess engine trained entirely on Lichess games. Maia is an engine built in the style of Leela that learns from human games instead of self-play games, with the goal of making human-like moves instead of optimal moves. In a given position, Maia predicts the exact move a human will play up to 53% of the time, whereas versions of Leela and Stockfish match human moves around 43% and 38% of the time respectively. As a result, Maia is the most natural, human-like chess engine to date, and provides a model of human play we will use to build data-driven chess teaching tools.

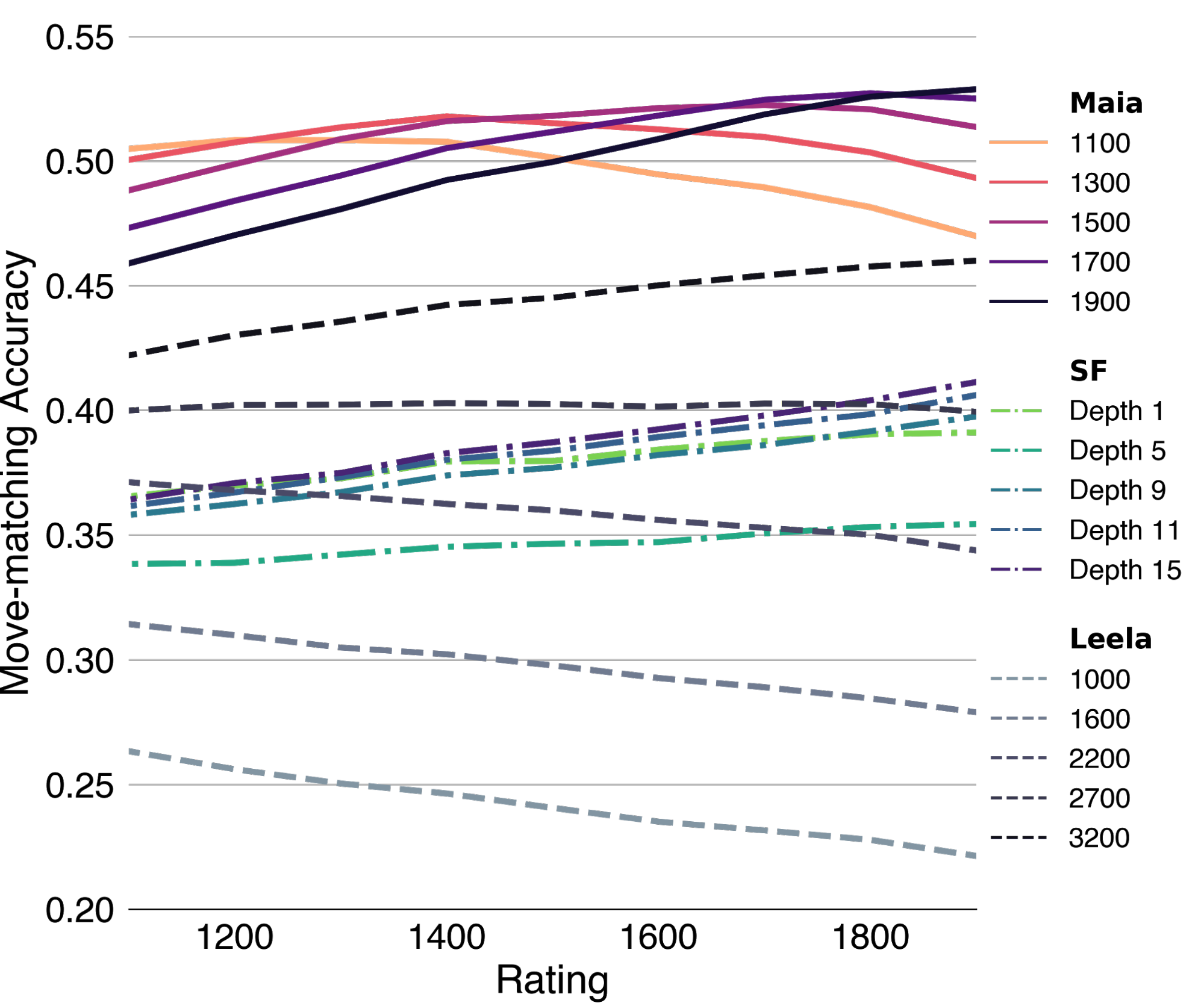

We trained 9 versions of Maia, one for each rating milestone between 1100 and 1900, on a total of more than 100 million Lichess games between human players. Each Maia captures human style in chess at its targeted level: Maia 1100 is most predictive of human play around the 1100 level, and Maia 1900 is most predictive of human play around the 1900 level. You can play Maia on Lichess: @maia1 is Maia 1100, @maia5 is Maia 1500, @maia9 is Maia 1900, and @MaiaMystery is where we experiment with new versions of Maia. If you play them, please tell us what you think on Twitter, in the Maia Bots group, or by email.

Note that each Maia plays at a level above the rating range it was trained on, for an interesting reason: Maia’s goal is to make the average move that players at its target level would make. Playing Maia 1100, for example, is more like playing a committee of 1100-rated players than playing a single 1100-rated player, because a committee tends to average out its members' idiosyncratic mistakes (but Maia still makes a lot of human-like blunders!).
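The committee effect can be illustrated with a toy example in Python. This is only a sketch of the intuition, not a description of how Maia works internally: the positions, the five imaginary players, the move labels, and the helper names `plurality_move` and `error_rate` are all invented for illustration.

```python
from collections import Counter

# Hypothetical data: each row is one position, each column is one of five
# imaginary 1100-rated players. "best" is the sound move; every other label
# is a mistake. None of this comes from real games.
POSITIONS = [
    ["best", "best", "best", "best", "hangs_queen"],
    ["best", "best", "best", "misses_fork", "best"],
    ["best", "best", "weakens_king", "best", "best"],
    ["best", "drops_pawn", "best", "best", "best"],
    ["traps_own_bishop", "best", "best", "best", "best"],
    # A mistake that every player shares survives the vote.
    ["tempting", "tempting", "tempting", "tempting", "tempting"],
]


def plurality_move(moves):
    """Return the move chosen by the most players in one position."""
    return Counter(moves).most_common(1)[0][0]


def error_rate(choices):
    """Return the fraction of choices that are not the sound move."""
    return sum(move != "best" for move in choices) / len(choices)


# Transpose the table so each column (one player) can be scored separately.
individual_rates = [error_rate(column) for column in zip(*POSITIONS)]
committee_choices = [plurality_move(row) for row in POSITIONS]

print("individual error rates:", [round(rate, 2) for rate in individual_rates])
print("committee error rate:", round(error_rate(committee_choices), 2))
```

In this made-up table every player errs in two of the six positions, while the plurality choice errs only in the one position where the mistake is shared. The one-off errors cancel out, but the common, human-like blunder remains.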

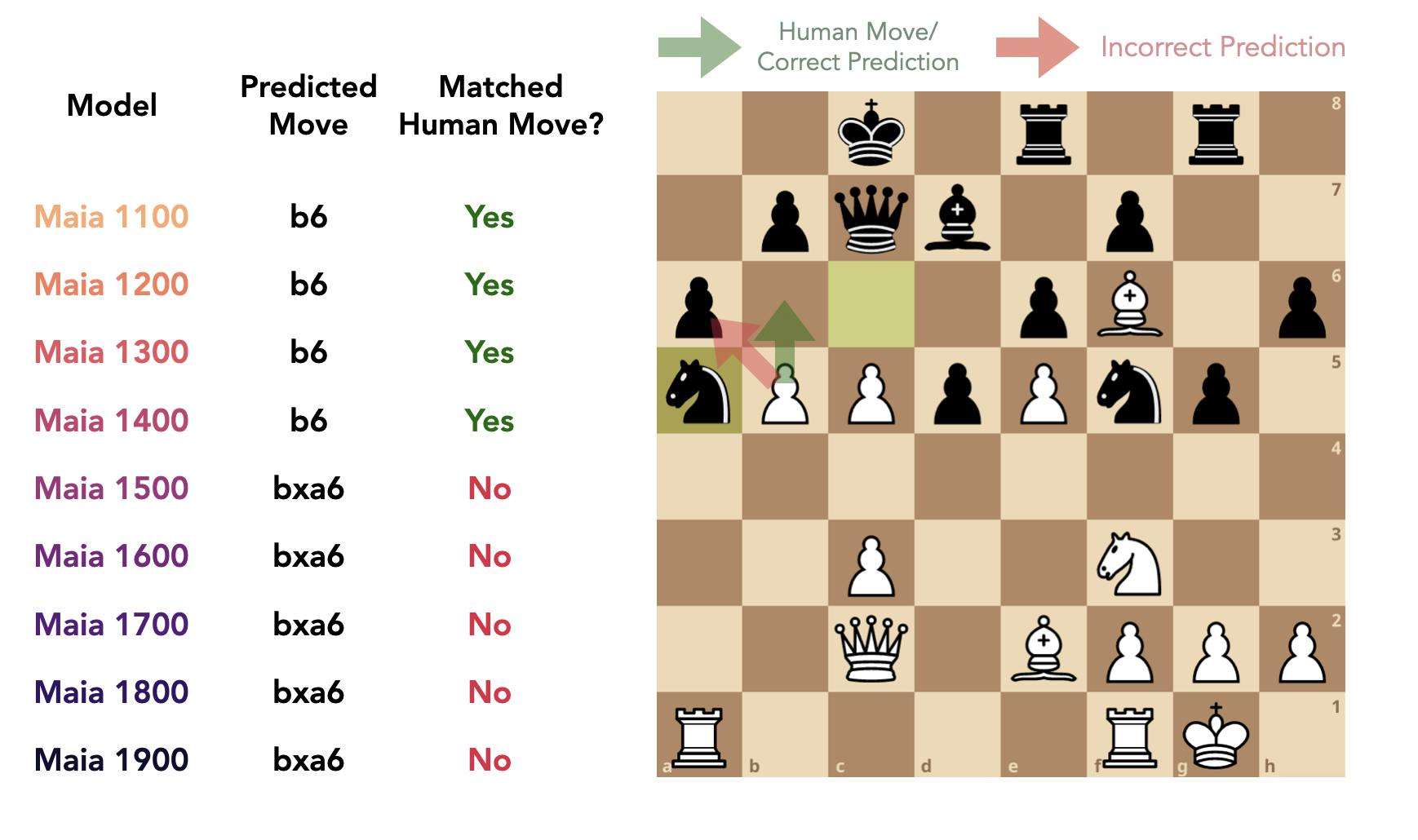

Because we trained 9 different versions of Maia, each at a targeted skill level, we can begin to algorithmically capture what kinds of mistakes players at specific skill levels make, and when people stop making them. In the example shown here, the Maias predict that people stop playing the tempting but wrong move b6 (the move played in the game) at around 1500.
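As a rough sketch of how such a cutoff could be read off a set of models, the Python snippet below scans hypothetical per-rating predictions for one position. Only the move b6 and the approximate 1500 cutoff come from the text above. The `PREDICTIONS` table, the placeholder label `"other"` (standing in for any move that is not b6), and the function `rating_where_mistake_stops` are assumptions made for illustration, not the Maia team's code or data.

```python
from typing import Dict, Optional

# Hypothetical top prediction of each rating-specific model for one position.
# "other" is a placeholder for any move that is not the mistake.
PREDICTIONS: Dict[int, str] = {
    1100: "b6",
    1200: "b6",
    1300: "b6",
    1400: "b6",
    1500: "other",
    1600: "other",
    1700: "other",
    1800: "other",
    1900: "other",
}


def rating_where_mistake_stops(
    predictions: Dict[int, str], mistake: str
) -> Optional[int]:
    """Return the lowest rating from which every model avoids `mistake`.

    Returns None if even the highest-rated model still predicts the mistake.
    """
    threshold = None
    # Walk from the strongest model down until one predicts the mistake.
    for rating in sorted(predictions, reverse=True):
        if predictions[rating] == mistake:
            break
        threshold = rating
    return threshold


print(rating_where_mistake_stops(PREDICTIONS, "b6"))
```

Scanning from the top down means a single weaker model that happens to avoid the move does not count as the point where players have outgrown it.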

Even when a human makes a terrible blunder – hanging a queen, for example – Maia predicts the exact mistake made more than 25% of the time. This means that Maia could look at your games and tell you which blunders were predictable, and which were more random mistakes. Guidance like this could be valuable for players trying to improve: If you repeatedly make a predictable kind of mistake, you know what you need to work on to hit the next level.
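One simple way to picture this split is sketched below in Python. It assumes a blunder counts as "predictable" when a Maia-like model's top predicted move equals the move actually played. That criterion, the `Blunder` record, the `split_blunders` helper, and the sample moves are all hypothetical and are not taken from any released Maia tool.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Blunder:
    ply: int        # half-move number at which the blunder occurred
    played: str     # move the human actually played
    predicted: str  # hypothetical top move from a Maia-like model


def split_blunders(blunders: List[Blunder]) -> Tuple[List[Blunder], List[Blunder]]:
    """Separate blunders the model predicted from those it did not."""
    predictable = [b for b in blunders if b.played == b.predicted]
    unpredictable = [b for b in blunders if b.played != b.predicted]
    return predictable, unpredictable


# Invented sample data for a single game.
game_blunders = [
    Blunder(ply=18, played="b6", predicted="b6"),
    Blunder(ply=31, played="Qxb7", predicted="Rd1"),
    Blunder(ply=44, played="Kh1", predicted="Kh1"),
]

predictable, unpredictable = split_blunders(game_blunders)
print("predictable plies:", [b.ply for b in predictable])
print("unpredictable plies:", [b.ply for b in unpredictable])
```

Under this assumption, the blunders in the first list are the ones worth studying as recurring patterns, while those in the second look more like one-off slips.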

This is an ongoing research project using chess as a model system for understanding how to design machine learning models for human-AI interaction. We plan to release beta versions of learning tools, teaching aids, and experiments based on Maia (analyses of your games, personalized puzzles, Turing tests, etc.). If you want to be the first to know, you can sign up for our email list here. For more details about the Maia project, head over to the Maia website, read our published research paper, or see the Microsoft Research blog post about the work.

Thank you to Lichess for making this project possible with the Lichess Game Database!