How training puzzles are generated

A tale of data re-use: How we make puzzles from your games.

Lichess has just reached 30 million games played, and nearly 700 000 of them have been computer analysed; so what should be done with all this information?

This is a question that I asked myself about 5 months ago. It seemed like a waste to have so many games analysed, looked at once or twice and then forgotten about. So I started asking myself how all of this information could be assimilated so everyone in the lichess community could learn from the mistakes of their peers.

The most obvious answer that came to mind was to generate training puzzles. After solving several thousand puzzles on other services I had a good idea about how puzzles are created; and with a reasonable amount of programming experience I had a pretty good idea on how to make a system for this purpose.

Primarily there are two main puzzle types: mating puzzles, and material advantage puzzles.

Mate in N Puzzles

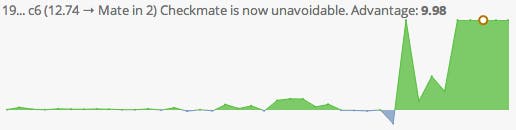

Mating puzzles were the first type to be scripted as they were the most straightforward to think about. It's simple, a pre-processor examines a game from the lichess database, highlights where a game went from a centipawn evaluation to a "Mate in N" evaluation; then sends the position to the main program, which in turn generates a puzzle.

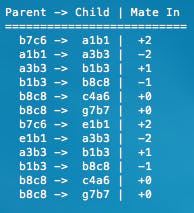

The next stage is a bit more complicated. A position that is given a "Mate in N" evaluation can have a broad array of solutions, branching off of each other until the final determination. It's not enough to simply parse the position to Stockfish and copy-paste the single solution that it suggests and call that the solution to the position, even evaluating multiple lines from the starting position is not enough.

Now for some technical jargon

Naturally, a recursive algorithm would need to be employed. At each stemming position, a list of potential moves needs to be generated, the bad moves pruned (removed), and then new positions (or nodes) are added to the solution tree.

On each node Stockfish runs for a couple of seconds, with multi_pv set to 50 (i.e. Stockfish is asked to evaluate 50 potential moves). The UCI output is then examined by a Regular Expression to generate a list of potential moves. Each of these moves is then individually examined by Stockfish to a greater depth to ascertain an accurate evaluation. Moves that result in checkmate in the minimum amount of moves are also solutions; moves that result in checkmate in 1 or 2 extra moves are considered retries, meaning the user can make another attempt to find the correct solution.

"Why not allow users to continue in longer mate in N lines?", the problem is that it's very difficult to determine if progress is being made. Maybe the line that they are attempting to follow can be repeated constantly. In initial versions of the script I allowed longer solutions to be played, but this resulted in the script becoming stuck in an infinite loop.

Once the script head reaches checkmate, the recursive function 'unravels' itself, compiling the completed solution tree in the process.

Material Advantage Puzzles

Material advantage puzzles are significantly more complicated than Mate in N puzzles, a few reasons why this is the case:

- The end point is not clearly defined. At what point should a material advantage puzzle stop?

- The objective is not clearly defined. Okay, the script is looking for lines where material is exchanged, that's simple enough, but when has enough material been collected? +2, +3, +9 - a Queen, and two bishops.

- How should longer solutions be handled? Maybe the solution is reasonably deep.

- What about counter-attacks from the computer? Should the player be expected to avoid mate theats?

- Perhaps the starting position doesn't have a tactical puzzle: how to know when to stop searching.

And that's just to name a few. All of these questions had to be formed into equations and reasoning, so I'll try to explain succinctly.

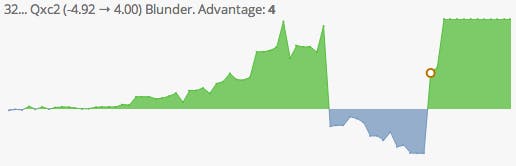

To start with, similarly to the mate in N puzzles, a pre-processor examines the advantage graph for when a player makes a significant mistake.

A situation such as the one highlighted above would be a prime location to find a tactical puzzle. The advantage has changed from -5 to +4, so there's probably a way for white to gain a significant amount of material back.

Generating the puzzle

Similarly to Mate in N puzzles, a recursive algorithm is used to generate the solution tree. Stockfish is still used in a very similar way to generate potential moves, but the conditions for continuing in the creation of a puzzle are far more complicated.

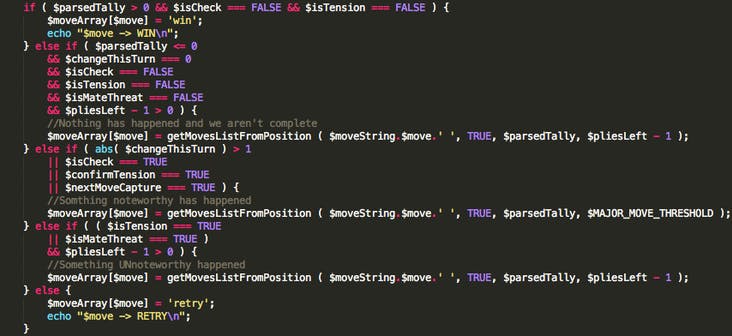

Yes, that's PHP. No, it's not perfect. But it gets the job done quite nicely.

These few lines of code are attempting to determine if the script should continue generating the puzzle, if it's reached the end of a good line, or if this continuation results in no solution. It took MANY generations to develop a working heuristic for this.

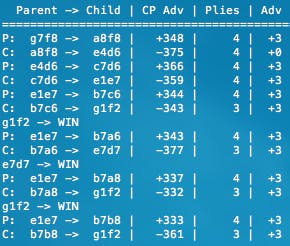

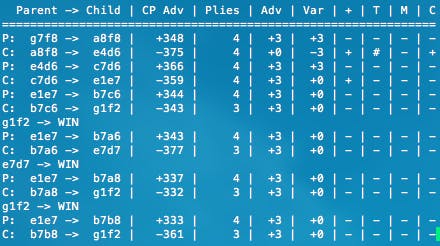

From left to right:

- P or C: P = Player Move, C = Computer Response

- Parent -> Child: The parent node/move and the child node/move that is being evaluated

- CP Adv: The current computer evaluation of the position for the side that is playing

- Plies: How many plies remain until the script aborts creating the puzzle

- Adv: The material advantage for the player, 1 = Pawn, 3 = Bishop or Knight, 5 = Rook, 9 = Queen

- Var: The amount the material advantage has changed this turn

- +: Is either side in check?

- T: Tension, is a weaker piece such as a pawn one move away from capturing a bishop or rook?

- M: Is either side under threat of mate?

- C: Is the next move a capture by inspecting Stockfish's most optimal move? If Tension is true this sub-process is triggered.

These results are parsed to the snippet of code above, and are all used to determine if and how the script should continue. In English:

- A line that gains adequate material in a reasonable amount of time is a solution.

- A line is complete when no more material can be gained; the player is no longer in check; there is no threat of being mated, and no more material can be captured.

- Alternate solutions are lines that also gain material in adequate time, and are within 10% of the top move's evaluation.

- Moves that are within 20% of the top move's evaluation are given re-attempts.

On a side note, it took roughly 700 lines of code to determine if a position is check for either side.

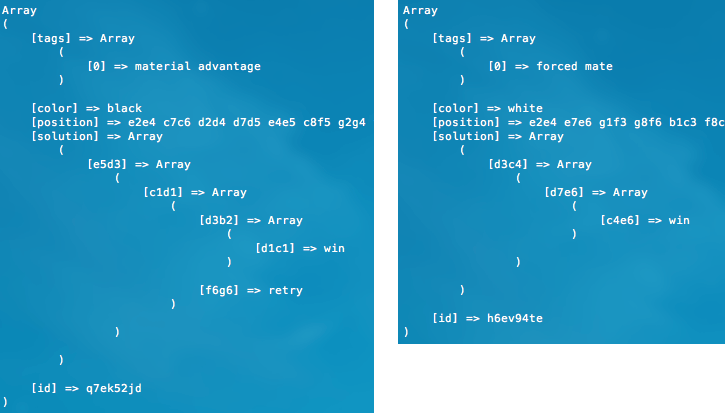

The packaged solution

Once all this processing is complete, the final tree solution is turned into a JSON string and shipped off to become available for solving.

In the tradition of lichess openness and code freedom, this script is open source and released under the MIT license on GitHub: clarkerubber/problem-creator.

Some Statistics

Today roughly 60 000 puzzles have been generated by this script. In total there has been more than 4 million puzzle attempts! On average that's roughly 70 attempts per puzzle, but some have been attempted more than 17 000 times! (see here)

What's next?

This is just one possible application for the large database of games that have been played at lichess. In the future I plan to help make tools that are tailored to the individual; the mistakes that they commonly make, and the openings and style of play that they prefer.

What do you think could be done with the large database of games on lichess? If your idea's good enough, it might just be implemented. Discuss in the comments section below.